26TH INTERNATIONAL CONFERENCE ON COMPUTING IN HIGH ENERGY & NUCLEAR PHYSICS (CHEP2023)

Norfolk Waterside Marriott

Thank you all for a very successful CHEP 2023 in Norfolk! It was a pleasure to have you all here. Proceedings have been published and recordings of most plenary sessions are available.

We look forward to seeing you all at CHEP 2024 in Krakow, Poland (Oct 19--25, 2024).

The CHEP conferences address the computing, networking and software issues for the world’s leading data‐intensive science experiments that currently analyze hundreds of petabytes of data using worldwide computing resources. The Conference provides a unique opportunity for computing experts across Particle and Nuclear Physics to come together to learn from each other and typically attracts over 500 participants. The event features plenary sessions, parallel sessions, and poster presentations; it publishes peer-reviewed proceedings.


CHEP 2023 Proceedings

The CHEP 2023 Proceedings have been published through the EPJ Web of Conferences. Thank you very much to everyone who contributed!


Registration


Plenary Session: Welcome / Highlighted Keynotes

Norfolk Ballroom III-V

Norfolk Waterside Marriott

235 East Main Street, Norfolk, VA 23510

Convener: Dodge, Gail (Old Dominion University)

1

Welcome and Introduction to CHEP23

Speakers: Boehnlein, Amber (JLAB), Dodge, Gail (Old Dominion University), Henderson, Stuart (Jefferson Lab), Scott, Bobby (United States Congressman)

2

Keynote: Evolutions and Revolutions in Computing: Science at the Frontier

The world is full of computing devices that calculate, monitor, analyze, and control processes. The underlying technical advances within computing hardware have been further enhanced by tremendous algorithmic advances across the spectrum of the sciences. The quest -- ever present in humans -- to push the frontiers of knowledge and understanding requires continuing advances in the development and use of computation, with an increasing emphasis on the analysis of complex data originating from experiments and observations. How this move toward data-intensive computing affects our underlying processes in the sciences remains to be fully appreciated. In this talk, I will briefly describe how we arrived at this point and give an outlook toward the end of the talk.

Speaker: Dean, David (TJNAF)

3

Keynote: Future Trends in Nuclear Physics Computing

In today's Nuclear Physics (NP), the exploration of the origin, evolution, and structure of the universe's matter is pursued through a broad research program at various collaborative scales, ranging from small groups to large experiments comparable in size to those in high-energy physics (HEP). Consequently, software and computing efforts vary from DIY approaches among a few researchers to well-organized activities within large experiments. With new experiments underway and on the horizon, and data volumes rapidly increasing even at small experiments, the NP community has been considering the next generation of data processing and analysis workflows that will optimize scientific output. In my keynote, I will discuss the unique aspects of software and computing in NP and explore how the NP community can strengthen collective efforts to chart a path forward for the next decade. This decade promises to be an exciting one, with diverse scientific programs ongoing at facilities such as CEBAF, FRIB, RHIC, and many others. I will also demonstrate how this path informs the software and computing at the future Electron-Ion Collider.

Speaker: Diefenthaler, Markus (Jefferson Lab)


10:30 AM

AM Break

Hampton Roads Ballroom (3rd floor)

Track 1 - Data and Metadata Organization, Management and Access: Storage

Norfolk Ballroom III-V

Conveners: Barisits, Martin (CERN), Lassnig, Mario (CERN)

4

dCache: storage system for data intensive science

The dCache project provides open-source software deployed internationally to satisfy ever more demanding storage requirements. Its multifaceted approach provides an integrated way of supporting different use cases with the same storage, from high-throughput data ingest and data sharing over wide area networks to efficient access from HPC clusters and long-term data persistence on tertiary storage. Though it was originally developed for the HEP experiments, today it is used by various scientific communities, including astrophysics, biomedicine, and life sciences, which have their own specific requirements. With this contribution we would like to highlight recent developments in dCache regarding integration with the CERN Tape Archive (CTA), advanced metadata handling, a bulk API for QoS transitions, a REST API to control interaction with tape systems, and future development directions.

Speaker: Mr Litvintsev, Dmitry (Fermilab)

5

Erasure Coding Xrootd Object Store

XRootD implemented a client-side erasure coding (EC) algorithm utilizing the Intel Intelligent Storage Acceleration Library. At SLAC, a prototype of XRootD EC storage was set up for evaluation. The architecture and configuration of the prototype are almost identical to those of a traditional non-EC XRootD storage behind a firewall: a backend XRootD storage cluster in its simplest form, and an internet-facing XRootD proxy that handles EC and spreads the data stripes of a file/object across several backend nodes. This prototype supports all functions used on a WLCG storage system: HTTP(s) and XRootD protocols, Third Party Copy, X509/VOMS/Token, etc. The cross-node EC architecture brings significant advantages in both performance and resilience, e.g. parallel data access and tolerance of downtime and hardware failure. This paper will describe the prototype’s architecture and its design choices, the performance under highly concurrent throughput and file/object operations, failure modes and their handling, data recovery methods, and administration. This paper also describes the work that explores the HTTP protocol feature in XRootD to support data access via the industry-standard Boto3 S3 client library.

Speaker: Yang, Wei (SLAC National Accelerator Laboratory)
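
The idea of spreading data stripes plus redundancy across backend nodes can be illustrated with a minimal sketch. This is not the XRootD implementation: the abstract names the Intel Intelligent Storage Acceleration Library, whereas the sketch below substitutes a single XOR parity stripe, the simplest possible erasure code, which tolerates the loss of exactly one stripe. The function names (`encode`, `recover`, `decode`) are hypothetical.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Bytewise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> List[bytes]:
    """Split data into k equal-size stripes (zero-padded) plus one XOR parity stripe."""
    stripe_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(stripe_len * k, b"\0")
    stripes = [padded[i * stripe_len:(i + 1) * stripe_len] for i in range(k)]
    parity = reduce(xor_bytes, stripes)
    return stripes + [parity]


def recover(stripes: List[Optional[bytes]]) -> List[bytes]:
    """Rebuild at most one missing stripe (marked None) by XOR-ing the survivors."""
    missing = [i for i, s in enumerate(stripes) if s is None]
    if len(missing) > 1:
        raise ValueError("single XOR parity tolerates only one lost stripe")
    rebuilt = list(stripes)
    if missing:
        survivors = [s for s in stripes if s is not None]
        rebuilt[missing[0]] = reduce(xor_bytes, survivors)
    return rebuilt


def decode(stripes: List[Optional[bytes]], original_len: int) -> bytes:
    """Drop the parity stripe, join the data stripes, and strip the padding."""
    full = recover(stripes)
    return b"".join(full[:-1])[:original_len]
```

In this picture each element of the list returned by `encode` would live on a different backend node, so a single node outage (one `None` entry) still allows `decode` to return the original bytes. Production erasure codes tolerate more than one simultaneous loss.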

6

POSIX access to remote storage with OpenID Connect AuthN/AuthZ

INFN-CNAF is one of the Worldwide LHC Computing Grid (WLCG) Tier-1 data centers, providing support in terms of computing, networking, storage resources and services also to a wide variety of scientific collaborations, ranging from physics to bioinformatics and industrial engineering.

Recently, several collaborations working with our data center have developed computing and data management workflows that require access to S3 storage services and the integration with POSIX capabilities.

To meet this requirement in distributed environments, where computing and storage resources are located at geographically distant physical sites, the ability to locally mount a file system from a remote site and operate directly on its files and directories becomes crucial.

Nevertheless, the access to the data must be regulated by standard, federated authentication and authorization mechanisms, such as OpenID Connect (OIDC), which is already adopted as AuthN/AuthZ mechanism within WLCG and the European Open Science Cloud (EOSC).

Starting from such principles, we evaluated the possibility to regulate data access by integrating JSON Web Token (JWT) authentication, provided by INDIGO-IAM as Identity Provider (IdP), with solutions based on S3 (for object storage) and HTTP (for hierarchical storage) protocols.

In particular, regarding S3 data exposure, we integrated MinIO and Ceph RADOS Gateway with s3fs-fuse, providing the needed custom libraries to mount an S3 bucket via FUSE while preserving the native object format for files. Both solutions support Secure Token Service (STS), providing a client with temporary credentials to perform a given operation on a storage resource by checking the value of a JWT claim associated with the request.

Native MinIO STS does not support the IAM JWT profile, thus we delegated the STS service to Hashicorp Vault in the case of MinIO.

RADOS Gateway is an object storage interface for Ceph. It provides a RESTful S3-compatible API and a feature for integration with OIDC IdP. Access tokens produced for OIDC clients can be used by the STS implemented within RADOS Gateway for authorizing specific S3 operations.

On the other hand, HTTP data access has been managed by using Rclone and WebDAV protocol, to mount a storage area via INDIGO-IAM token authentication. In this case the storage area is exposed via HTTP by using the StoRM-WebDAV application, but the solution is general enough to be used with other HTTP data management servers (e.g. Apache, NGINX).

In this respect, a comparison between the performance of the S3 and WebDAV protocols has been carried out within the same Red Hat OpenShift environment, in order to better understand which solution is most suitable for each of the use cases of interest.

Speaker: Dr Fornari, Federico (INFN-CNAF)

7

Enabling Storage Business Continuity and Disaster Recovery with Ceph distributed storage

The Storage Group in the CERN IT Department operates several Ceph storage clusters with an overall capacity exceeding 100 PB. Ceph is a crucial component of the infrastructure delivering IT services to all the users of the Organization as it provides: i) Block storage for the OpenStack infrastructure, ii) CephFS used as persistent storage by containers (OpenShift and Kubernetes) and as shared filesystems by HPC clusters, and iii) S3 object storage for cloud-native applications, monitoring, and software distribution across the WLCG.

The Ceph infrastructure at CERN has been rationalized and restructured to offer storage solutions for high(er) availability and Disaster Recovery / Business Continuity. In this contribution, we give an overview of how we transitioned from a single RBD zone to multiple ones enabling Storage Availability zones and how RBD mirroring functionalities available in Ceph upstream have been hardened. Also, we illustrate future plans for storage BC/DR including backups via restic to S3 and Tape, replication of objects across multiple storage zones, and the instantiation of clusters spanning different computing centres.

Speaker: Bocchi, Enrico (CERN)

8

ECHO: optimising object store data access for Run-3 and the evolution towards HL-LHC

Data access at the UK Tier-1 facility at RAL is provided through its ECHO storage, serving the requirements of the WLCG and increasing numbers of other HEP and astronomy related communities.

ECHO is a Ceph-backed erasure-coded object store, currently providing in excess of 40 PB of usable space, with frontend access to data provided via XRootD or gridFTP, using the libradosstriper library of Ceph.

The storage must service the needs of: high-throughput compute, with staged and direct file access passing through an XCache on each worker node; data access to compute running at storageless satellite sites; and managed inter-site data transfers using the recently adopted HTTPS protocol (via WebDAV), which includes multi-hop data transfers to and from RAL’s newly commissioned CTA tape endpoint.

A review of the experiences of providing data access via an object store within these data workflows is presented, including the details of the improvements necessary for the transition to WebDAV, used for most inter-site data movements, and enhancements for direct-IO file access, where the development and optimisation of buffering and range coalescence strategies are explored.

In addition to serving the requirements of LHC Run-3, preparations for Run-4 and for large astronomy experiments are underway. One example is with ROOT-based data formats, where the evolution from a TTree to RNTuple data structure provides an opportunity for storage providers to benchmark and optimise against this new format. A comparison of the current performance between data formats within ECHO is presented, along with details of potential improvements.

Speaker: Walder, James (RAL, STFC, UKRI)
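
The range coalescence mentioned for direct-IO access can be sketched as follows. This is a generic illustration rather than the strategy used in ECHO or XRootD: it merges requested byte ranges that overlap or sit within a chosen gap of each other, so that many small reads become fewer, larger backend requests. The function name `coalesce_ranges` and the `max_gap` parameter are assumptions made for the example.

```python
from typing import List, Tuple

Range = Tuple[int, int]  # (offset, length) in bytes


def coalesce_ranges(ranges: List[Range], max_gap: int = 0) -> List[Range]:
    """Merge byte ranges that overlap or lie within max_gap bytes of each other."""
    merged: List[Range] = []
    for offset, length in sorted(ranges):
        if merged:
            prev_offset, prev_length = merged[-1]
            prev_end = prev_offset + prev_length
            if offset - prev_end <= max_gap:
                # Extend the previous range to cover this one (and the gap).
                new_end = max(prev_end, offset + length)
                merged[-1] = (prev_offset, new_end - prev_offset)
                continue
        merged.append((offset, length))
    return merged
```

A larger `max_gap` trades extra bytes read (the gaps) for fewer round trips to the object store; the right value depends on request latency and object striping, which the sketch does not model.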

9

EOS Software Evolution Enabling LHC Run-3

EOS has been the main storage system at CERN for more than a decade, continuously improving in order to meet the ever evolving requirements of the LHC experiments and the whole physics user community. In order to satisfy the demands of LHC Run-3, in terms of storage performance and tradeoff between cost and capacity, EOS was enhanced with a set of new functionalities and features that we will detail in this paper.

First of all, we describe the use of erasure coded layouts in a large-scale deployment, which enables an efficient use of available storage capacity while at the same time providing end-users with better throughput when accessing their data. This new operating model implies more coupling between the machines in a cluster, which in turn leads to the next set of EOS improvements that we discuss, targeting I/O traffic shaping, better I/O scheduling policies and tagged traffic prioritization. Increasing the size of the EOS clusters to cope with experiment demands imposes stringent constraints on data integrity and durability, which we addressed with a redesigned consistency check engine. Another focus area of EOS development was to minimize the operational load by making the internal operational procedures (draining, balancing or conversions) more robust and efficient, to allow easy management of multiple clusters and avoid possible scaling issues.

All these improvements, available in the EOS 5 release series, are coupled with the new XRootD 5 framework, which brings additional security features like TLS support and optimizations for large data transfers like page read and page write functionalities. Last but not least, the area of authentication/authorization methods has seen important developments by adding support for different types of bearer tokens that we will describe along with EOS specific token extensions. We conclude by highlighting potential areas of the EOS architecture that might require further developments or re-design in order to cope with the ever-increasing demands of our end-users.

Speaker: Mr Caffy, Cedric (CERN)


Track 2 - Online Computing: DAQ Frameworks

Marriott Ballroom V-VI

Conveners: Antel, Claire (Universite de Geneve (CH)), Pantaleo, Felice (CERN)

10

One year of LHCb triggerless DAQ: achievements and lessons learned.

The LHCb experiment is one of the four large experiments on the LHC at CERN. This forward spectrometer is designed to investigate differences between matter and antimatter by studying beauty and charm physics. The detector and the entire DAQ chain have been upgraded to profit from the higher luminosity delivered by the particle accelerator during Run 3. The new DAQ system introduces a substantially different model for reading out the detector data, which has not been used in systems of similar scale up to now. We designed a system capable of performing read-out, event-building and online reconstruction of the full event rate produced by the LHC, without incurring the inefficiencies that a low-level hardware trigger would introduce. This design paradigm requires a DAQ system capable of ingesting an aggregated throughput of ~32 Tb/s. This poses significant technical challenges, which have been solved by using both off-the-shelf solutions, like InfiniBand HDR, and custom-developed FPGA-based electronics.

In this contribution, we will: provide an overview of the final system design, with a special focus on the event-building infrastructure; present quantitative measurements taken during the commissioning of the system; discuss the resiliency of the system concerning latency and fault tolerance; and provide feedback on the first year of operations of the system.

Speaker: Pisani, Flavio (CERN)

11

The new ALICE Data Acquisition system (O2/FLP) for LHC Run 3

ALICE (A Large Ion Collider Experiment) has undertaken a major upgrade during the LHC Long Shutdown 2. The increase in the detector data rates led to a hundredfold increase in the input raw data, up to 3.5 TB/s. To cope with it, a new common Online and Offline computing system, called O2, has been developed and put in production.

The O2/FLP system, successor of the ALICE DAQ system, implements the critical functions of detector readout, data quality control and operational services running in the CR1 data centre at the experimental site. Data from the 15 ALICE subdetectors are read out via 8000 optical links by 500 custom PCIe cards hosted in 200 nodes. It addresses novel challenges such as the continuous readout of the TPC detector while keeping compatibility with legacy detector front-end electronics.

This paper discusses the final architecture and design of the O2/FLP system and provides an overview of all its components, both hardware and software. It presents the selection process for the FLP nodes, the different commissioning steps and the main accomplishments so far. It will conclude with the challenges that lie ahead and how they will be addressed.

Speaker: Barroso, Vasco (CERN)
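
To give a feel for the scale of the figures quoted in this abstract, the following back-of-the-envelope sketch derives average loads per link, card and node. It assumes decimal terabytes and a perfectly uniform spread of the peak 3.5 TB/s input across all links and nodes, which the abstract does not claim; real detectors load their links unevenly, so these are order-of-magnitude averages only. The function name `per_unit_averages` is hypothetical.

```python
# Figures quoted in the abstract.
PEAK_INPUT_BYTES_PER_S = 3.5e12  # 3.5 TB/s, decimal terabytes assumed
OPTICAL_LINKS = 8000
READOUT_CARDS = 500
FLP_NODES = 200


def per_unit_averages() -> dict:
    """Average load per link, card, and node, assuming a uniform spread."""
    return {
        "links_per_card": OPTICAL_LINKS / READOUT_CARDS,
        "cards_per_node": READOUT_CARDS / FLP_NODES,
        "MB_per_s_per_link": PEAK_INPUT_BYTES_PER_S / OPTICAL_LINKS / 1e6,
        "GB_per_s_per_node": PEAK_INPUT_BYTES_PER_S / FLP_NODES / 1e9,
    }


if __name__ == "__main__":
    for name, value in per_unit_averages().items():
        print(f"{name}: {value:g}")
```

Under these assumptions each card serves 16 links on average and each node hosts 2.5 cards on average, with roughly 17.5 GB/s of peak input per node.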

12

Operational experience with the new ATLAS HLT framework for LHC Run 3

Athena is the software framework used in the ATLAS experiment throughout the data processing path, from the software trigger system through offline event reconstruction to physics analysis. For Run 3 data taking (which started in 2022) the framework has been reimplemented into a multi-threaded framework. In addition to having to be remodelled to work in this new framework, the ATLAS High Level Trigger (HLT) system has also been updated to rely on common solutions between online and offline software to a greater extent than in Run 2 (data taking between 2015-2018). We present the now operational new HLT system, reporting on how the system was tested, commissioned and optimised. In addition, we show developments that have been made in tools that are used to monitor and configure the HLT, some of which are designed from scratch for Run 3.

Speaker: Poreba, Aleksandra (CERN)

13

INDRA-ASTRA

The INDRA-ASTRA project is part of the ongoing R&D on streaming readout and AI/ML at Jefferson Lab. In the interdisciplinary project, nuclear physicists and data scientists work towards a prototype for an autonomous, responsive detector system as a first step towards a fully autonomous experiment. In our presentation, we will present our method for autonomous calibration of DIS experiments using baseline calibrations and autonomous change detection via the multiscale method. We will demonstrate how the versatile multiscale method we have developed can be used to increase reliability of data and find and fix issues in near real time. We will show test results from a prototype detector and the running, large-scale SBS experiment at Jefferson Lab.

Speaker: Mr Fang, Ronglong

14

ATLAS Trigger and Data Acquisition Upgrades for the High Luminosity LHC

The ATLAS experiment at CERN is constructing upgraded systems for the "High Luminosity LHC", with collisions due to start in 2029. In order to deliver an order of magnitude more data than previous LHC runs, 14 TeV protons will collide with an instantaneous luminosity of up to $7.5 \times 10^{34}$ cm$^{-2}$s$^{-1}$, resulting in much higher pileup and data rates than the current experiment was designed to handle. While this is essential to realise the physics programme, it presents a huge challenge for the detector, trigger, data acquisition and computing. The detector upgrades themselves also present new requirements and opportunities for the trigger and data acquisition system.

The design of the TDAQ upgrade comprises: a hardware-based low-latency real-time Trigger operating at 40 MHz, Data Acquisition which combines custom readout with commodity hardware and networking to deal with 4.6 TB/s input, and an Event Filter running at 1 MHz which combines offline-like algorithms on a large commodity compute service with the potential to be augmented by commercial accelerators. Commodity servers and networks are used as far as possible, with custom ATCA boards, high speed links and powerful FPGAs deployed in the low-latency parts of the system. Offline-style clustering and jet-finding in FPGAs, and accelerated track reconstruction are designed to combat pileup in the Trigger and Event Filter respectively.

This contribution will report recent progress on the design, technology and construction of the system. The physics motivation and expected performance will be shown for key physics processes.

Speaker: Negri, Andrea (CERN)

15

From hyperons to hypernuclei online

The fast algorithms for data reconstruction and analysis of the FLES (First Level Event Selection) package of the CBM (FAIR/GSI) experiment were successfully adapted to work on the High Level Trigger (HLT) of the STAR (BNL) experiment online. For this purpose, a so-called express data stream was created on the HLT, which enabled full processing and analysis of the experimental data in real time.

With this express data processing, including online calibration, reconstruction of tracks and short-lived particles, as well as search and analysis of hyperons and hypernuclei, approximately 30% of all the data collected in 2019-2021 within the Beam Energy Scan (BES-II) program at energies down to 3 GeV has been processed on the free resources of the HLT computer farm.

A block diagram of the express data processing and analysis will be presented. Particular features of the online calibration and of the application of the reconstruction algorithms, operation under pile-up conditions at low collision energies in fixed-target mode, and results of the real-time search for hyperons and hypernuclei up to $^5_\Lambda$He with a significance of 11.6$\sigma$ at the HLT will be presented and discussed. The high quality of the express data enabled preliminary analysis results in several physics measurements.

Speaker: Prof. Kisel, Ivan (Goethe University Frankfurt am Main)


Track 3 - Offline Computing: Reconstruction

Hampton Roads Ballroom VI

Conveners: Bandieramonte, Marilena (University of Pittsburgh), Dr Srpimanobhas, Norraphat (Chulalongkorn University)

16

Machine learning for ambiguity resolution in ACTS

The reconstruction of particle trajectories is a key challenge of particle physics experiments, as it directly impacts particle identification and physics performance while also representing one of the main CPU consumers of many high energy physics experiments. As the luminosity of particle colliders increases, this reconstruction will become more challenging and resource intensive. New algorithms are thus needed to address these challenges efficiently. One potential step of track reconstruction is the ambiguity resolution. In this step, performed at the end of the tracking chain, we select which track candidates should be kept and which ones need to be discarded. In the ATLAS experiment, for example, this is achieved by identifying fake tracks, removing duplicates and determining via a Neural Network which hits should be shared by multiple tracks. The speed of this algorithm is directly driven by the number of track candidates, which can be reduced at the cost of some physics performance. Since this problem is fundamentally an issue of comparison and classification, we propose to use a machine learning based approach to the Ambiguity Resolution itself. Using a nearest neighbour search, we can efficiently determine which candidates belong to the same truth particle. Afterward, we can apply a Neural Network (NN) to compare those tracks and determine which ones are duplicates and which one should be kept. Finally, another NN is applied to all the remaining candidates to identify which ones are fakes and remove those. This approach is implemented within the A Common Tracking Software (ACTS) framework and tested on the Open Data Detector (ODD), a realistic virtual detector similar to a future ATLAS one, to fully evaluate the potential of this approach.

Speaker: Allaire, Corentin (IJCLab)
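
The two-stage logic described above (group candidates that likely come from the same particle, then keep one per group) can be sketched in a few lines. This is only an illustration of the workflow, not the ACTS implementation: the grouping here is a greedy radius search in (eta, phi), and the neural-network ranking is replaced by a trivial stand-in score, the hit count. The class `TrackCandidate`, the functions, and the default radius are all assumptions made for the example.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class TrackCandidate:
    eta: float
    phi: float
    n_hits: int


def delta_r(a: TrackCandidate, b: TrackCandidate) -> float:
    """Angular distance in (eta, phi), with the phi difference wrapped into [-pi, pi]."""
    dphi = math.remainder(a.phi - b.phi, 2.0 * math.pi)
    return math.hypot(a.eta - b.eta, dphi)


def group_candidates(cands: List[TrackCandidate], radius: float) -> List[List[TrackCandidate]]:
    """Greedy grouping: each candidate joins the first group whose seed is within radius."""
    groups: List[List[TrackCandidate]] = []
    # Visit candidates from most to fewest hits so each group's seed is its best-scored member.
    for cand in sorted(cands, key=lambda c: c.n_hits, reverse=True):
        for group in groups:
            if delta_r(cand, group[0]) < radius:
                group.append(cand)
                break
        else:
            groups.append([cand])
    return groups


def resolve(cands: List[TrackCandidate], radius: float = 0.05) -> List[TrackCandidate]:
    """Keep one candidate per group: the seed, which has the highest stand-in score."""
    return [group[0] for group in group_candidates(cands, radius)]
```

In the approach described in the abstract, a trained network would take the place of the hit-count comparison, and a second network would then reject fakes among the survivors; neither is modelled here.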

17

Generalizing mkFit and its Application to HL-LHC

MkFit is an implementation of the Kalman filter-based track reconstruction algorithm that exploits both thread- and data-level parallelism. In the past few years the project transitioned from the R&D phase to deployment in the Run-3 offline workflow of the CMS experiment. The CMS tracking performs a series of iterations, targeting reconstruction of tracks of increasing difficulty after removing hits associated to tracks found in previous iterations. MkFit has been adopted for several of the tracking iterations, which contribute to the majority of reconstructed tracks. When tested in the standard conditions for production jobs, speedups in track pattern recognition are on average of the order of 3.5x for the iterations where it is used (3-7x depending on the iteration). Multiple factors contribute to the observed speedups, including vectorization and a lightweight geometry description, as well as improved memory management and single precision. Efficient vectorization is achieved with both the icc and the gcc (default in CMSSW) compilers and relies on a dedicated library for small matrix operations, Matriplex, which has recently been released in a public repository. While the mkFit geometry description already featured levels of abstraction from the actual Phase-1 CMS tracker, several components of the implementations were still tied to that specific geometry. We have further generalized the geometry description and the configuration of the run-time parameters, in order to enable support for the Phase-2 upgraded tracker geometry for the HL-LHC and potentially other detector configurations. The implementation strategy and preliminary results with the HL-LHC geometry will be presented. Speedups in track building from mkFit imply that track fitting becomes a comparably time consuming step of the tracking chain. Prospects for an mkFit implementation of the track fit will also be discussed.

Speaker: Tadel, Matevz (UCSD)
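
For readers unfamiliar with the Kalman filter that underlies this kind of track reconstruction, the predict/update cycle can be shown in its simplest scalar form. This is a textbook sketch, not mkFit code: a real track fit propagates a multi-parameter state and covariance matrix through the detector geometry, whereas the example below estimates a single constant quantity from noisy measurements. The function name `kalman_1d` and its parameters are hypothetical.

```python
from typing import Iterable, List, Tuple


def kalman_1d(
    measurements: Iterable[float],
    meas_var: float,
    process_var: float = 0.0,
    x0: float = 0.0,
    p0: float = 1e6,
) -> List[Tuple[float, float]]:
    """Scalar Kalman filter for a constant-state model; returns (estimate, variance) per step."""
    x, p = x0, p0
    history: List[Tuple[float, float]] = []
    for z in measurements:
        # Predict: the state model is the identity, so only the uncertainty grows.
        p = p + process_var
        # Update: blend prediction and measurement, weighted by the Kalman gain.
        gain = p / (p + meas_var)
        x = x + gain * (z - x)
        p = (1.0 - gain) * p
        history.append((x, p))
    return history
```

With a very uncertain starting point (large `p0`) and no process noise, the estimate after N measurements approaches their mean and the variance approaches `meas_var / N`, which is the behaviour a track fit exploits as it adds hits layer by layer.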

18

traccc - a close to single-source track reconstruction demonstrator for CPU and GPU

Despite recent advances in optimising the track reconstruction problem for high particle multiplicities in high energy physics experiments, it remains one of the most demanding reconstruction steps with regard to complexity and computing resources. Several attempts have been made in the past to deploy suitable algorithms for track reconstruction on hardware accelerators, often by tailoring the algorithmic strategy to the hardware design. This led in certain cases to algorithmic compromises, and often came along with simplified descriptions of detector geometry, input data and magnetic field.

The traccc project is an R&D initiative of the ACTS common track reconstruction; it aims to provide a complete track reconstruction chain for both CPU and GPU architectures. Emphasis has been put on sharing as much common source code as possible while trying to avoid algorithmic and physics performance compromises. Within traccc, dedicated components have been developed that are usable on standard CPU and GPU architectures: an abstraction layer for linear algebra operations that allows customizing the mathematical backend (algebra-plugin), a host and device memory management system (vecmem), a generic vector field library (covfie) for the magnetic field description, and a geometry and propagation library (detray). They serve as building blocks of a fully developed track reconstruction demonstrator based on clustering (connected component labelling), space point formation, track seeding and combinatorial track finding.

We present the concepts and implementation of the traccc demonstrator and classify the physics and computational performance on selected hardware using the Open Data Detector in a scenario mimicking the HL-LHC run condition. In addition, we give insight into our attempts to use different native language and portability solutions for GPUs, and summarize our main findings during the development of the entire traccc project.

Speaker: Krasznahorkay, Attila (CERN)
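
The clustering step named above, connected component labelling, can be illustrated with a short CPU-only sketch. It is not the traccc algorithm, which is designed for parallel execution on CPUs and GPUs; the version below is a plain sequential breadth-first search that groups activated cells touching each other (including diagonally) into clusters. The `Cell` alias, the 8-connectivity choice, and the function name `label_clusters` are assumptions made for the example.

```python
from collections import deque
from typing import Iterable, List, Set, Tuple

Cell = Tuple[int, int]  # (row, column) of an activated pixel or strip


def label_clusters(cells: Iterable[Cell]) -> List[Set[Cell]]:
    """Group activated cells into clusters of 8-connected neighbours via breadth-first search."""
    unvisited = set(cells)
    clusters: List[Set[Cell]] = []
    while unvisited:
        seed = unvisited.pop()
        cluster = {seed}
        queue = deque([seed])
        while queue:
            row, col = queue.popleft()
            # Visit the 3x3 neighbourhood; the centre cell is already removed from unvisited.
            for d_row in (-1, 0, 1):
                for d_col in (-1, 0, 1):
                    neighbour = (row + d_row, col + d_col)
                    if neighbour in unvisited:
                        unvisited.remove(neighbour)
                        cluster.add(neighbour)
                        queue.append(neighbour)
        clusters.append(cluster)
    return clusters
```

Each resulting cluster would then feed the next stage of such a chain, space point formation, for example by taking a charge-weighted centroid of its cells.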

19

GPU-based algorithms for primary vertex reconstruction at CMS

The high luminosity expected from the LHC during Run 3 and, especially, the HL-LHC data-taking period introduces significant challenges in the CMS event reconstruction chain. The additional computational resources needed to treat this increased quantity of data surpass the expected increase in processing power for the next years. In order to fit the projected resource envelope, CMS is re-inventing its online and offline reconstruction algorithms, with their execution on CPU+GPU platforms in mind. Track clustering and primary vertex reconstruction today account for about 10% of the reconstruction chain at 200 pileup and involve similar computations over hundreds to thousands of reconstructed tracks. This makes them a natural candidate for the development of a GPU-based algorithm that parallelizes the work by dividing it into blocks. In this contribution we discuss the physics performance as well as the runtime performance of a new vertex clustering algorithm CMS developed for heterogeneous platforms. We will show that the physics results achieved are better than those of the current CMS vertexing algorithm in production, that the algorithm is up to 8 times faster on CPU, and that it also runs on GPUs. We will also discuss the plans for using this algorithm in production in Run 3 and for extending it to make use of timing information provided by the CMS Phase-2 MIP Timing Detector (MTD).

Speaker: Erice Cid, Carlos Francisco (Boston University (US))

20

The Exa.TrkX Project

Building on the pioneering work of the HEP.TrkX project [1], Exa.TrkX developed geometric learning tracking pipelines that include metric learning and graph networks. These end-to-end pipelines capture the relationships between spacepoint measurements belonging to a particle track. We tested the pipelines on simulated data from HL-LHC tracking detectors [2,5], Liquid Argon TPCs for neutrino experiments [3,8], and the straw tube tracker of the PANDA experiment [4]. The HL-LHC pipeline provides state-of-the-art tracking performance (Fig. 2), scales linearly with spacepoint density (Fig. 1), and has been optimized to run end-to-end on GP-GPUs, achieving a 20x speed-up with respect to the baseline implementation [6,9].

The Exa.TrkX geometric learning approach also has shown promise in less traditional tracking applications, like large-radius tracking for new physics searches at the LHC [7].

Exa.TrkX also contributed to developing and optimizing common data formats for ML training and inference targeting both neutrino detectors and LHC trackers.

When applied to LArTPC neutrino experiments, the Exa.TrkX message-passing graph neural network classifies nodes, defined as the charge measurements or hits, according to the underlying particle type that produced them (Fig. 3). Thanks to special 3D edges, our network can connect nodes within and across wire planes and achieve 94% accuracy with 96% consistency across wire planes [8].

From the very beginning, the Exa.TrkX project has functioned as a collaboration open beyond its three original institutions (CalTech, FNAL, and LBNL). We released the code associated with every publication and produced tutorials and quickstart examples to test our pipeline.

Eight US universities and six international institutions have contributed significantly to our research program and publications. The collaboration currently includes members of the ATLAS, CMS, DUNE, and PANDA experiments. Members of the FNAL muon g-2 experiment and CERN MUonE projects have tested the Exa.TrkX pipeline on their datasets.

Exa.TrkX profits from multi-year partnerships with related research projects, namely the ACTS common tracking software, the ECP ExaLearn project, the NSF A3D3 institute, and the Fast ML Lab. More recently, as our pipeline matured and became applicable to more complex datasets, we started a partnership with HPE Lab, which uses our pipeline as a benchmark for its hyperparameter optimization and common metadata framework. NVIDIA (through the NERSC NESAP program) is evaluating the Exa.TrkX pipeline as an advanced use case for their R&D in graph neural network optimization.

At this stage of the project, a necessary focus of the Exa.TrkX team is on consolidation and dissemination of the results obtained so far. We are re-engineering the LHC pipeline to improve its modularity and usability across experiment frameworks. We aim to integrate our pipelines with online and offline reconstruction chains of neutrino and collider detectors and release a repository of production-quality HEP pattern recognition models that can be readily composed into an experiment-specific pipeline.

We are investigating heterogeneous graph networks to improve our pipelines' physics performance and make our models more easily generalizable [11]. Heterogeneity allows mixing and matching information from multiple detector geometries and types (strips vs. pixels, calorimeters vs. trackers vs. timing detectors, etc.).

We have demonstrated that it is possible to recover “difficult” tracks (e.g., tracks with a missing spacepoint) by using hierarchical graph networks [10]. Next, we need to scale these models to more challenging datasets, including full HL-LHC simulations.

We are also investigating how to parallelize our pipeline across multiple GPUs. Data parallelism for graph networks is an active research area in geometric learning. The unique setting of our problem, with large graphs that change structure with every event, makes parallelizing the inference step particularly challenging.

A future research project's ultimate goal would be to combine these four R&D threads into a generic pipeline for HEP pattern recognition that operates on heterogeneous data at different scales, from raw data to particles.

[1] Farrell, S., Calafiura, P., et al. Novel deep learning methods for track reconstruction. (2018). arXiv. https://doi.org/10.48550/arXiv.1810.06111

[2] Ju, X., Murnane, D., et al. Performance of a geometric deep learning pipeline for HL-LHC particle tracking. Eur. Phys. J. C 81, 876 (2021). https://doi.org/10.1140/epjc/s10052-021-09675-8

[3] Hewes, J., Aurisano, A., et al. Graph Neural Network for Object Reconstruction in Liquid Argon Time Projection Chambers. EPJ Web of Conferences 251, 03054 (2021). https://doi.org/10.1051/epjconf/202125103054

[4] Akram, A., & Ju, X. Track Reconstruction using Geometric Deep Learning in the Straw Tube Tracker (STT) at the PANDA Experiment. (2022). arXiv. https://doi.org/10.48550/arXiv.2208.12178

[5] Caillou, S., Calafiura, P. et al. ATLAS ITk Track Reconstruction with a GNN-based pipeline. (2022). ATL-ITK-PROC-2022-006. https://cds.cern.ch/record/2815578

[6] Lazar, A., Ju, X., et al. Accelerating the Inference of the Exa.TrkX Pipeline. (2022). arXiv. https://doi.org/10.48550/arXiv.2202.06929

[7] Wang, C., Ju, X., et al. Reconstruction of Large Radius Tracks with the Exa.TrkX pipeline. (2022). arXiv. https://doi.org/10.48550/arXiv.2203.08800

[8] Gumpula, K., et al. Graph Neural Network for Three Dimensional Object Reconstruction in Liquid Argon Time Projection Chambers. (2022). Presented at the Connecting the Dots 2022 workshop. https://indico.cern.ch/event/1103637/contributions/4821839

[9] Acharya, N., Liu, E., Lucas, A., Lazar, A. Optimizing the Exa.TrkX Inference Pipeline for Manycore CPUs. (2022). Presented at the Connecting the Dots 2022 workshop. https://indico.cern.ch/event/1103637/contributions/4821918

[10] Liu, R., Murnane, D., et al. Hierarchical Graph Neural Networks for Particle Reconstruction. (2022). Presented at the ACAT 2022 conference. https://indico.cern.ch/event/1106990/contributions/4996236/

[11] Murnane, D., Caillou, S. Heterogeneous GNN for tracking. (2022). Presented at the Princeton Mini-workshop on Graph Neural Networks for Tracking. https://indico.cern.ch/event/1128328/contributions/4900744

Speaker: Calafiura, Paolo (LBNL) -

21

Faster simulated track reconstruction in the ATLAS Fast Chain

The production of simulated datasets for use by physics analyses consumes a large fraction of ATLAS computing resources, a problem that will only get worse as increases in the instantaneous luminosity provided by the LHC lead to more collisions per bunch crossing (pile-up). One of the more resource-intensive steps in the Monte Carlo production is reconstructing the tracks in the ATLAS Inner Detector (ID), which takes up about 60% of the total detector reconstruction time [1]. This talk discusses a novel technique called track overlay, which substantially speeds up the ID reconstruction. In track overlay the pile-up ID tracks are reconstructed ahead of time and overlaid onto the ID tracks from the simulated hard-scatter event. We present our implementation of this track overlay approach as part of the ATLAS Fast Chain simulation, as well as a method for deciding in which cases it is possible to use track overlay in the reconstruction of simulated data without performance degradation.

[1] ATL-PHYS-PUB-2021-012 (60% refers to Run3, mu=50, including large-radius tracking, p11)

Speaker: Dr Leight, William (University of Massachusetts, Amherst)

-

16

-

Track 4 - Distributed Computing: Analysis Workflows, Modeling and Optimisation Marriott Ballroom II-III

Marriott Ballroom II-III

Norfolk Waterside Marriott

235 East Main Street Norfolk, VA 23510

Conveners: Barreiro Megino, Fernando (Unive), Joshi, Rohini (SKAO)-

22

Distributed Machine Learning with PanDA and iDDS in LHC ATLAS

Machine learning has become one of the important tools for High Energy Physics analysis. As the size of the dataset increases at the Large Hadron Collider (LHC), and at the same time the search spaces become bigger and bigger in order to exploit the physics potentials, more and more computing resources are required for processing these machine learning tasks. In addition, complex advanced machine learning workflows are developed in which one task may depend on the results of previous tasks. How to make use of the vast distributed CPUs/GPUs in WLCG for these big, complex machine learning tasks has become a popular area. In this presentation, we will present our efforts on distributed machine learning in PanDA and iDDS (intelligent Data Delivery Service). We will first address the difficulties of running machine learning tasks on distributed WLCG resources. Then we will present our implementation with DAG (Directed Acyclic Graph) and sliced parameters in iDDS, which distributes machine learning tasks across distributed computing resources and executes them in parallel through PanDA. Next we will demonstrate some use cases we have implemented, such as Hyperparameter Optimization, Monte Carlo Toy confidence limits calculation and Active Learning. Finally, we will describe some future directions.
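As a rough illustration of the DAG-plus-sliced-parameters idea, the sketch below uses only the Python standard library. It does not use the PanDA or iDDS APIs; the task names (`prepare`, `train_<i>`, `select`) and the parameter grid are hypothetical, chosen to mimic a hyperparameter scan in which every training job depends on a shared preparation step and a final selection step depends on all training jobs.

```python
from graphlib import TopologicalSorter
from itertools import product


def slice_parameters(grid):
    """Expand a parameter grid into one dict per grid point (one slice per job)."""
    names = sorted(grid)
    return [dict(zip(names, values)) for values in product(*(grid[n] for n in names))]


def build_dag(grid):
    """Hypothetical workflow: prepare -> one train task per slice -> select."""
    slices = slice_parameters(grid)
    dag = {"prepare": set()}  # maps each task to the set of tasks it depends on
    for i in range(len(slices)):
        dag[f"train_{i}"] = {"prepare"}
    dag["select"] = {f"train_{i}" for i in range(len(slices))}
    return dag, slices


def execution_waves(dag):
    """Group tasks into waves; all tasks in one wave could run in parallel."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


if __name__ == "__main__":
    dag, slices = build_dag({"learning_rate": [0.1, 0.01], "depth": [2, 4]})
    for wave in execution_waves(dag):
        print(wave)
```

In a real system each wave would be dispatched to remote resources and the next wave released as results arrive; here the waves are only computed, not executed.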

Speaker: Weber, Christian (Brookhaven National Laboratory) -

23

ATLAS data analysis using a parallelized workflow on distributed cloud-based services with GPUs

We present a new implementation of simulation-based inference using data collected by the ATLAS experiment at the LHC. The method relies on large ensembles of deep neural networks to approximate the exact likelihood. Additional neural networks are introduced to model systematic uncertainties in the measurement. Training of the large number of deep neural networks is automated using a parallelized workflow with distributed computing infrastructure integrated with cloud-based services. We will show an example workflow using the ATLAS PanDA framework integrated with GPU infrastructure from Google Cloud Platform. Numerical analysis of the neural networks is optimized with JAX and JIT. The novel machine-learning method and cloud-based parallel workflow can be used to improve the sensitivity of several other analyses of LHC data.

Speaker: Sandesara, Jay (University of Massachusetts, Amherst) -

24

Modelling Distributed Heterogeneous Computing Infrastructures for HEP Applications

Predicting the performance of various infrastructure design options in complex federated infrastructures with computing sites distributed over a wide area that support a plethora of users and workflows, such as the Worldwide LHC Computing Grid (WLCG), is not trivial. Due to the complexity and size of these infrastructures, it is not feasible to deploy experimental test-beds at large scales merely for the purpose of comparing and evaluating alternate designs.

An alternative is to simulate the behaviours of these systems based on realistic simulation models. This approach has been used successfully in the past to identify efficient and practical infrastructure designs for High Energy Physics (HEP). A prominent example is the Monarc simulation framework, which was used to study the initial structure of the WLCG. However, new simulation capabilities are needed to simulate large-scale heterogeneous infrastructures with complex networks as well as application behaviours that include various data access and caching patterns.

In this context, a modern tool to simulate high energy physics workloads that execute on distributed computing infrastructures based on the SimGrid and WRENCH simulation frameworks is outlined. Studies of its accuracy and scalability are presented using HEP as a case-study.

Speaker: Mr Horzela, Maximilian (Karlsruhe Institute of Technology) -

25

Digital Twin Engine Infrastructure in the interTwin Project

InterTwin is an EU-funded project that started on the 1st of September 2022. The project will work with domain experts from different scientific domains in building a technology to support digital twins within scientific research. Digital twins are models for predicting the behaviour and evolution of real-world systems and applications.

InterTwin will focus on employing machine-learning techniques to create and train models that are able to quickly and accurately reflect their physical counterparts in a broad range of scientific domains. The project will develop, deploy and “road harden” a blueprint for supporting digital twins on federated resources. For that purpose, it will support a diverse set of science use-cases, in the domains of radio telescopes (Meerkat), particle physics (CERN/LHC and Lattice-QCD), gravitational waves (Virgo), as well as climate research and environment monitoring (e.g. prediction of flooding and other extreme weather due to climate change). The ultimate goal is to provide a flexible infrastructure that can accommodate the needs of many additional scientific fields.

In the talk, we will present an overview of the interTwin project along with the corresponding Digital Twin Engine (DTE) architecture for federating the different, heterogeneous resources available to the scientific use-cases (storage, HPC, HTC, quantum) when training and exploiting digital twins within the different scientific domains. The challenges faced when designing the architecture will be described, along with the solutions being developed to address them. interTwin is required to be interoperable with other infrastructures, including the EuroHPC-based Destination Earth Initiative (DestinE) and C-SCALE, an infrastructure for accessing Copernicus satellite data. We will also present our strategy for making DTE available within the European Open Science Cloud (EOSC). The details of all such interoperability will also be presented.

Speaker: Fuhrmann, Patrick (DESY) -

26

IceCube SkyDriver - A SaaS Solution for Event Reconstruction using the Skymap Scanner

The IceCube Neutrino Observatory is a cubic kilometer neutrino telescope located at the geographic South Pole. To accurately and promptly reconstruct the arrival direction of candidate neutrino events for Multi-Messenger Astrophysics use cases, IceCube employs Skymap Scanner workflows managed by the SkyDriver service. The Skymap Scanner performs maximum-likelihood tests on individual pixels generated from the Hierarchical Equal Area isoLatitude Pixelation (HEALPix) algorithm. Each test is computationally independent, which allows for massive parallelization. This workload is distributed using the Event Workflow Management System (EWMS)—a message-based workflow management system designed to scale to trillions of pixels per day. SkyDriver orchestrates multiple distinct Skymap Scanner workflows behind a REST interface, providing an easy-to-use reconstruction service for real-time candidate, cataloged, and simulated events. Here, we outline the SkyDriver service technique and the initial development of EWMS.
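Because each pixel test is independent, the scan maps naturally onto a worker pool. The following is a minimal sketch of that pattern only: it uses a plain declination/right-ascension grid as a stand-in for HEALPix, a made-up quadratic function in place of the real likelihood, and a local thread pool in place of EWMS. All names (`TRUE_DIRECTION`, `toy_neg_log_likelihood`, `make_pixels`, `scan`) are hypothetical.

```python
import math
from concurrent.futures import ThreadPoolExecutor

# Hypothetical "true" arrival direction (declination, right ascension) in radians.
TRUE_DIRECTION = (0.3, 1.2)


def toy_neg_log_likelihood(pixel):
    """Toy stand-in for a per-pixel likelihood test (flat-plane distance squared)."""
    dec, ra = pixel
    return (dec - TRUE_DIRECTION[0]) ** 2 + (ra - TRUE_DIRECTION[1]) ** 2


def make_pixels(n_dec, n_ra):
    """Pixel centres on a regular grid; a real scan would use HEALPix pixels."""
    return [
        (-math.pi / 2 + (i + 0.5) * math.pi / n_dec, (j + 0.5) * 2 * math.pi / n_ra)
        for i in range(n_dec)
        for j in range(n_ra)
    ]


def scan(pixels, workers=4):
    """Evaluate every pixel independently and return the best pixel and all values."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        values = list(pool.map(toy_neg_log_likelihood, pixels))
    best_index = min(range(len(pixels)), key=values.__getitem__)
    return pixels[best_index], values


if __name__ == "__main__":
    best, values = scan(make_pixels(60, 120))
    print("best pixel centre:", best)
```

Since no pixel depends on another, the same structure works whether the workers are threads, processes, or remote jobs fed through a message queue.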

Speaker: Evans, Ric (IceCube, University of Wisconsin-Madison) -

27

Analysis Grand Challenge benchmarking tests on selected sites

A fast turn-around time and ease of use are important factors for systems supporting the analysis of large HEP data samples. We study and compare multiple technical approaches.

This presentation will be about setting up and benchmarking the Analysis Grand Challenge (AGC) [1] using CMS Open Data. The AGC is an effort to provide a realistic physics analysis with the intent of showcasing the functionality, scalability and feature-completeness of the Scikit-HEP Python ecosystem.

I will present the results of setting up the necessary software environment for the AGC and benchmarking the analysis' runtime on various computing clusters: the institute SLURM cluster at my home institute, LMU Munich, a SLURM cluster at LRZ (WLCG Tier-2 site) and the analysis facility Vispa [2], operated by RWTH Aachen.

Each site provides a slightly different software environment and mode of operation, which poses interesting challenges for the flexibility of a setup like that intended for the AGC.

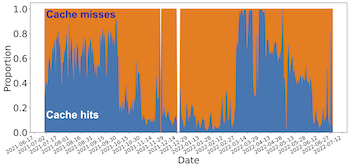

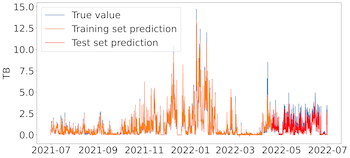

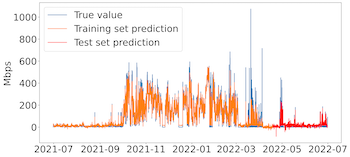

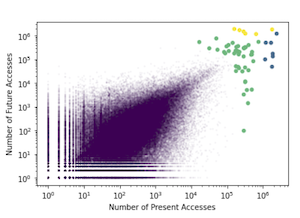

Comparing these benchmarks to each other also provides insights about different storage and caching systems. At LRZ and LMU we have regular Grid storage (HDD) as well as an SSD-based XCache server, and on Vispa a sophisticated per-node caching system is used.

[1] https://github.com/iris-hep/analysis-grand-challenge

[2] https://vispa.physik.rwth-aachen.de/

Speaker: Koch, David (LMU)

-

22

-

Track 5 - Sustainable and Collaborative Software Engineering: Sustainable Languages and Architectures Chesapeake Meeting Room

Chesapeake Meeting Room

Norfolk Waterside Marriott

235 East Main Street Norfolk, VA 23510

Conveners: Sailer, Andre (CERN), Sexton, Elizabeth (FNAL)-

28

Is Julia ready to be adopted by HEP?

The Julia programming language was created 10 years ago and is now a mature and stable language with a large ecosystem including more than 8,000 third-party packages. It was designed for scientific programming to be a high-level and dynamic language, as Python is, while achieving runtime performance comparable to C/C++ or even faster. With this, we ask ourselves if the Julia language and its ecosystem are now ready for adoption by the High Energy Physics community. We will report on a number of investigations and studies of the Julia language that have been done for various representative HEP applications, ranging from computing-intensive initial data processing of experimental data and simulation, to final interactive data analysis and plotting. Aspects of collaborative code development of large software within a HEP experiment have also been investigated: scalability with large development teams, continuous integration and code testing, code reuse, language interoperability to enable an adiabatic migration of packages and tools, software installation and distribution, training of the community, and benefits from developments in industry and in academia from other fields.

Speaker: Gál, Tamás (Erlangen Centre for Astroparticle Physics) -

29

Polyglot Jet Finding

The evaluation of new computing languages for a large community, like HEP, involves comparison of many aspects of the languages' behaviour, ecosystem and interactions with other languages. In this paper we compare a number of languages using a common, yet non-trivial, HEP algorithm: the tiled $N^2$ clustering algorithm used for jet finding. Specifically, we compare the algorithm implemented in Python (using numpy), Julia and Rust with the reference implementation in C++ from Fastjet. In addition to the speed of the implementation, we describe the ergonomics of the language for the coder and the effort required to achieve the best performance, which can directly impact code readability and sustainability.
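For readers unfamiliar with sequential-recombination jet finding, the sketch below shows a brute-force version in plain Python. It is not the tiled $N^2$ strategy and not the Fastjet implementation: it recomputes every distance in each iteration, with no nearest-neighbour caching and no tiling. The choice of the anti-kt distance measure and of four-vector addition for merging are assumptions made for illustration; the abstract does not state which measure was benchmarked.

```python
import math


def _pt2(p):
    return p[0] ** 2 + p[1] ** 2


def _rapidity(p):
    # Assumes the particle is not exactly along the beam axis (E != |pz|).
    return 0.5 * math.log((p[3] + p[2]) / (p[3] - p[2]))


def _phi(p):
    return math.atan2(p[1], p[0])


def _delta_r2(a, b):
    dphi = abs(_phi(a) - _phi(b))
    if dphi > math.pi:
        dphi = 2 * math.pi - dphi
    dy = _rapidity(a) - _rapidity(b)
    return dy * dy + dphi * dphi


def antikt_brute_force(particles, radius=0.4):
    """Cluster (px, py, pz, E) tuples; returns jets sorted by decreasing pt."""
    objs = [tuple(p) for p in particles]
    jets = []
    while objs:
        best = (float("inf"), None, None)  # (distance, i, j); j is None for a beam distance
        for i, a in enumerate(objs):
            d_beam = 1.0 / _pt2(a)
            if d_beam < best[0]:
                best = (d_beam, i, None)
            for j in range(i + 1, len(objs)):
                b = objs[j]
                d_ij = min(1.0 / _pt2(a), 1.0 / _pt2(b)) * _delta_r2(a, b) / radius**2
                if d_ij < best[0]:
                    best = (d_ij, i, j)
        _, i, j = best
        if j is None:
            jets.append(objs.pop(i))  # closest to the beam: promote to a final jet
        else:
            merged = tuple(x + y for x, y in zip(objs[i], objs[j]))
            objs.pop(j)  # j > i, so index i stays valid
            objs[i] = merged
    return sorted(jets, key=_pt2, reverse=True)


if __name__ == "__main__":
    event = [
        (100.0, 0.0, 0.0, 100.0),
        (50.0, 5.0, 0.0, math.hypot(50.0, 5.0)),
        (-80.0, 0.0, 0.0, 80.0),
    ]
    for jet in antikt_brute_force(event):
        print(jet)
```

The optimised variants avoid the repeated all-pairs loop by tracking each particle's nearest neighbour, and tiling restricts the neighbour search to nearby cells in rapidity and azimuth.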

Speaker: Stewart, Graeme (CERN) -

30

The ATLAS experiment software on ARM

With an increased dataset obtained during the Run-3 of the LHC at CERN and the even larger expected increase of the dataset by more than one order of magnitude for the HL-LHC, the ATLAS experiment is reaching the limits of the current data processing model in terms of traditional CPU resources based on x86_64 architectures and an extensive program for software upgrades towards the HL-LHC has been set up. The ARM architecture is becoming a competitive and energy efficient alternative. Some surveys indicate its increased presence in HPCs and commercial clouds, and some WLCG sites have expressed their interest. Chip makers are also developing their next generation solutions on ARM architectures, sometimes combining ARM and GPU processors in the same chip. Therefore it is important that the Athena software embraces the change and is able to successfully exploit this architecture.

We report on the successful port of the ATLAS experiment offline and online software framework Athena to ARM and the successful physics validation of simulation workflows. For this we have set up an ATLAS Grid site using ARM compatible middleware and containers on Amazon Web Services (AWS) ARM resources. The ARM version of Athena is fully integrated in the regular software build system and distributed like default software releases. In addition, the workflows have been integrated into the HepScore benchmark suite which is the planned WLCG wide replacement of the HepSpec06 benchmark used for Grid site pledges. In the overall porting process we have used resources on AWS, Google Cloud Platform (GCP) and CERN. A performance comparison of different architectures and resources will be discussed.

Speaker: Elmsheuser, Johannes (Brookhaven National Laboratory) -

31

Multilanguage Frameworks

High Energy Physics software has been a victim of the necessity to choose one implementation language as no really usable multi-language environment existed. Even a co-existence of two languages in the same framework (typically C++ and Python) imposes a heavy burden on the system. The role of different languages was generally limited to well encapsulated domains (like Web applications, databases, graphics), with very limited connection to the central framework.

New developments in compilers and run-time environments have made it possible to create truly multilanguage frameworks, with seamless, user-friendly and high-performance inter-operation of many languages that traditionally live in disconnected domains (like C-based languages vs JVM languages or Web languages).

Various possibilities and strategies for creating true multi-language frameworks will be discussed, emphasizing their advantages and possible road blocks.

A prototype of a massively multilanguage application will be presented, using a very wide spectrum of languages working together (C++, Python, JVM languages, JavaScript,...). Each language will be used in the domain where it offers a strong comparative advantage (speed, user-friendliness, availability of third-party libraries and tools, graphical and web capabilities).

The performance gain from modern multi-language environments will also be demonstrated, as well as gains in the overall memory footprint.

Possibilities of converting existing HEP frameworks into multilanguage environments will be discussed in concrete examples and demonstrations.

A real-life example of a widely multilanguage environment will be demonstrated for the case of multi-language access to the data storage of the LSST telescope Fink project.

Speaker: Hrivnac, Julius (IJCLab) -

32

User-Centered Design for the Electron-Ion Collider

Software and computing are an integral part of our research. According to the survey for the “Future Trends in Nuclear Physics Computing” workshop in September 2020, students and postdocs spent 80% of their time on the software and computing aspects of their research. For the Electron-Ion Collider, we are looking for ways to make software (and computing) "easier" to use. Scientists of all levels worldwide should be empowered to participate actively in Electron-Ion Collider simulations and analyses.

In this presentation, we will summarize our work on user-centered design for the Electron-Ion Collider. We have collected information on the community's specific software tools and practices on an annual basis. We have also organized focus group discussions with the broader community and developed user archetypes based on the feedback from the focus groups. The user archetypes represent a common class of users and provide input to software developers as to which users they are writing software for and help with structuring documentation.

Speaker: Diefenthaler, Markus (Jefferson Lab) -

33

Train to Sustain

The HSF/IRIS-HEP Software Training group provides software training skills to new researchers in High Energy Physics (HEP) and related communities. These skills are essential to produce high-quality and sustainable software needed to do the research. Given the thousands of users in the community, sustainability, though challenging, is the centerpiece of its approach. The training modules are open source and collaborative. Different tools and platforms, like GitHub, enable technical continuity, collaboration and nurture the sense to develop software that is reproducible and reusable. This contribution describes these efforts.

Speaker: Malik, Sudhir (University of Puerto Rico Mayaguez)

-

28

-

Track 6 - Physics Analysis Tools: Statistical Inference and Fitting Hampton Roads Ballroom VIII

Hampton Roads Ballroom VIII

Norfolk Waterside Marriott

235 East Main Street Norfolk, VA 23510

Conveners: Hageboeck, Stephan (CERN), Held, Alexander (University of Wisconsin–Madison)-

34

RooFit's new heterogeneous computing backend

RooFit is a library for building and fitting statistical models that is part of ROOT. It is used in most experiments in particle physics, in particular, the LHC experiments. Recently, the backend that evaluates the RooFit likelihood functions was rewritten to support performant computations of model components on different hardware. This new backend is referred to as the "batch mode". So far, it supports GPUs with CUDA and also the vectorizing instructions on the CPU. With ROOT 6.28, the new batch mode is feature-complete and speeds up all use cases targeted by RooFit, even on a single CPU thread. The GPU backend further reduces the likelihood evaluation time, particularly for unbinned fits to large datasets. The speedup is most significant when all likelihood components support GPU evaluation. Still, if this is not the case, the backend will optimally distribute the computation on the CPU and GPU to guarantee a speedup.

RooFit is a very extensible library with a vast user interface to inject behavior changes at almost every point of the likelihood calculation, which the new heterogeneous computation backend must handle. This presentation discusses our approach and lessons learned when facing this challenge. The highlight of this contribution is showcasing the performance improvements for benchmark examples, fits from the RooFit tutorials, and real-world fit examples from LHC experiments. We will also elaborate on how users can implement GPU support for their custom probability density functions and explain the current limitations and future developments.

Speaker: Rembser, Jonas (CERN) -

35

Making Likelihood Calculations Fast: Automatic Differentiation Applied to RooFit

With the growing datasets of current and next-generation High-Energy and Nuclear Physics (HEP/NP) experiments, statistical analysis has become more computationally demanding. These increasing demands elicit improvements and modernizations in existing statistical analysis software. One way to address these issues is to improve parameter estimation performance and numeric stability using automatic differentiation (AD). AD's computational efficiency and accuracy is superior to the preexisting numerical differentiation techniques and offers significant performance gains when calculating the derivatives of functions with a large number of inputs, making it particularly appealing for statistical models with many parameters. For such models, many HEP/NP experiments use RooFit, a toolkit for statistical modeling and fitting that is part of ROOT.

In this talk, we report on the effort to support the AD of RooFit likelihood functions. Our approach is to extend RooFit with a tool that generates overhead-free C++ code for a full likelihood function built from RooFit functional models. Gradients are then generated using Clad, a compiler-based source-code-transformation AD tool, using this C++ code. We present our results from applying AD to the entire minimization pipeline and profile likelihood calculations of several RooFit and HistFactory models at the LHC-experiment scale. We show significant reductions in calculation time and memory usage for the minimization of such likelihood functions. We also elaborate on this approach's current limitations and explain our plans for the future.

This contribution combines R&D expertise from computer science applied at scale for HEP/NP analysis: we demonstrate that source-transformation-based AD can be incorporated into complex, domain-specific codes such as RooFit to give substantial performance and scientific capability improvements.
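To make the contrast between automatic and numerical differentiation concrete, here is a small self-contained sketch. It is not Clad and not RooFit: it implements forward-mode AD with a hypothetical `Dual` number class and applies it to a toy Gaussian negative log-likelihood, next to a central finite difference. Forward mode is used only because it fits in a few lines; the efficiency advantage for models with many parameters mentioned above comes from reverse-mode accumulation, which this sketch does not implement.

```python
import math


class Dual:
    """A value together with its derivative with respect to one chosen input."""

    def __init__(self, val, der=0.0):
        self.val, self.der = val, der

    @staticmethod
    def _lift(other):
        return other if isinstance(other, Dual) else Dual(other)

    def __add__(self, other):
        o = self._lift(other)
        return Dual(self.val + o.val, self.der + o.der)

    __radd__ = __add__

    def __sub__(self, other):
        o = self._lift(other)
        return Dual(self.val - o.val, self.der - o.der)

    def __rsub__(self, other):
        return self._lift(other) - self

    def __mul__(self, other):
        o = self._lift(other)
        return Dual(self.val * o.val, self.der * o.val + self.val * o.der)

    __rmul__ = __mul__

    def __truediv__(self, other):
        o = self._lift(other)
        return Dual(self.val / o.val, (self.der * o.val - self.val * o.der) / (o.val * o.val))


def log(x):
    """Logarithm that propagates derivatives when given a Dual."""
    return Dual(math.log(x.val), x.der / x.val) if isinstance(x, Dual) else math.log(x)


def gaussian_nll(mu, sigma, data):
    """Gaussian negative log-likelihood with constant terms dropped."""
    total = 0.0
    for x in data:
        z = (x - mu) / sigma
        total = total + 0.5 * z * z + log(sigma)
    return total


def ad_gradient(data, mu, sigma):
    """One forward pass per parameter, seeding that parameter's derivative with 1."""
    d_mu = gaussian_nll(Dual(mu, 1.0), Dual(sigma), data).der
    d_sigma = gaussian_nll(Dual(mu), Dual(sigma, 1.0), data).der
    return d_mu, d_sigma


def finite_difference(func, x, step=1e-6):
    """Central difference; accuracy depends on the choice of step."""
    return (func(x + step) - func(x - step)) / (2.0 * step)


if __name__ == "__main__":
    data = [1.0, 2.0, 4.0]
    print("AD:", ad_gradient(data, 2.0, 1.5))
    print("FD:", finite_difference(lambda m: gaussian_nll(m, 1.5, data), 2.0))
```

The AD result is exact up to floating-point rounding, while the finite-difference result carries a truncation error that depends on the step size.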

Speaker: Singh, Garima (Princeton University (US)/CERN) -

36

Build-a-Fit: RooFit configurable parallelization and fine-grained benchmarking tools for order of magnitude speedups in your fits

RooFit is a toolkit for statistical modeling and fitting, presented first at CHEP2003, and together with RooStats is used for measurements and statistical tests by most experiments in particle physics, particularly the LHC experiments.

As the LHC program progresses, physics analyses become more ambitious and computationally more demanding, with fits of hundreds of data samples to joint models with over a thousand parameters no longer an exception. While such complex fits can be robustly performed in RooFit, they may take many hours on a single CPU, significantly impeding the ability of physicists to interactively understand, develop and improve them. Here we present recent RooFit developments to address this, focusing on significant improvements of wall-time performance of complex fits.

A complete rewrite of the internal back-end of the RooFit likelihood calculation code in ROOT 6.28 now allows RooFit likelihood fits to be massively parallelized in two ways. Gradients that are normally serially calculated inside MINUIT, and which dominate the total fit time, are now calculated in a parallel way inside RooFit. Furthermore, calculations of the likelihood in serial phases of the minimizer (initialization and gradient descent steps) are also internally parallelized. No modification of any user code is required to take advantage of these features.

A key to achieving good scalability for these parallel calculations is close to perfect load balancing over the workers, which is complicated by the fact that for realistic complex fit models the calculations to parallelize cannot be split in components of equal or even comparable size. As part of this update, instruments have been added to RooFit for extensive performance monitoring that allow the user to understand the effect of algorithmic choices in task scheduling and mitigate performance bottlenecks.

We will show that with a new dynamic scheduling strategy and a strategic choice of ordering derivative calculations, excellent scalability can be achieved, resulting in order-of-magnitude wall-time speedups for complex realistic LHC fits such as the ATLAS Run-2 combined Higgs interpretation.
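The effect of scheduling and task ordering on load balance can be seen in a toy model. The sketch below is not RooFit code; it simply compares the wall time (makespan) of a fixed up-front split of tasks against a dynamic scheme in which the next task always goes to the worker that becomes free first, optionally with the most expensive tasks handed out first. The task costs are made up.

```python
import heapq


def static_makespan(costs, n_workers):
    """Wall time when tasks are split up front into contiguous equal-count chunks."""
    chunk = -(-len(costs) // n_workers)  # ceiling division
    return max(sum(costs[i:i + chunk]) for i in range(0, len(costs), chunk))


def dynamic_makespan(costs, n_workers, largest_first=False):
    """Wall time when each task goes to whichever worker becomes free first."""
    order = sorted(costs, reverse=True) if largest_first else list(costs)
    finish_times = [0.0] * n_workers
    heapq.heapify(finish_times)
    for cost in order:
        earliest = heapq.heappop(finish_times)
        heapq.heappush(finish_times, earliest + cost)
    return max(finish_times)


if __name__ == "__main__":
    # Hypothetical per-task costs: many cheap derivative calculations, one expensive.
    costs = [1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 2.0, 10.0]
    print("static split:          ", static_makespan(costs, 4))
    print("dynamic, given order:  ", dynamic_makespan(costs, 4))
    print("dynamic, largest first:", dynamic_makespan(costs, 4, largest_first=True))
```

For these costs the three strategies give wall times of 12, 11 and 10 units respectively; the last equals the cost of the single most expensive task, which is the lower bound for any schedule.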

Speaker: Wolffs, Zef (Nikhef) -

37

New developments in Minuit2

Minuit is a program implementing a function minimisation algorithm written at CERN more than 50 years ago. It is still used by almost all statistical analyses in High Energy Physics to find the optimal likelihood and best parameter values. A new version, Minuit2, re-implemented the original algorithm in C++ a few years ago and is provided as a ROOT library or a standalone C++ module. It is also available as a Python package, iminuit.

This new version has recently been improved by adding some new features. These include support for an external gradient and Hessian, allowing the use of Automatic Differentiation techniques or parallel computation of the gradients, and the addition of new minimisation algorithms such as BFGS and Fumili. We will present an overview of the new implementation showing the newly added features, and we will also present a comparison with other existing minimisation packages available in C++ or in the Python scientific ecosystem.

Speaker: Moneta, Lorenzo (CERN) -

38

Bayesian methodologies in particle physics with pyhf

Collider physics analyses have historically favored Frequentist statistical methodologies, with some exceptions of Bayesian inference in LHC analyses through use of the Bayesian Analysis Toolkit (BAT). We demonstrate work towards an approach for performing Bayesian inference for LHC physics analyses that builds upon the existing APIs and model building technology of the pyhf and PyMC Python libraries and leverages pyhf’s automatic differentiation and hardware acceleration through its JAX computational backend. This approach presents a path toward unified APIs in pyhf that allow for users to choose a Frequentist or Bayesian approach towards statistical inference, leveraging their respective strengths as needed, without having to transition between using multiple libraries or fall back to using pyhf with BAT through the Julia programming language PyCall package. Examples of Markov chain Monte Carlo implementations using Metropolis-Hastings and Hamiltonian Monte Carlo are presented.
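As a reminder of what a Metropolis-Hastings sampler does, the following sketch samples the posterior of a signal strength in a single-bin counting experiment. It uses neither pyhf nor PyMC; the model (Poisson likelihood with expected count `mu * signal + background` and a flat prior on `mu >= 0`), the numbers, and all function names are assumptions chosen for illustration.

```python
import math
import random


def log_posterior(mu, n_obs, signal, background):
    """Unnormalised log posterior: Poisson likelihood times a flat prior on mu >= 0."""
    if mu < 0.0:
        return -math.inf
    expected = mu * signal + background
    return n_obs * math.log(expected) - expected


def metropolis_hastings(log_post, start, n_steps, step_size, seed=0):
    """Random-walk Metropolis-Hastings with a symmetric Gaussian proposal."""
    rng = random.Random(seed)
    current, current_lp = start, log_post(start)
    chain = []
    for _ in range(n_steps):
        proposal = current + rng.gauss(0.0, step_size)
        proposal_lp = log_post(proposal)
        log_ratio = proposal_lp - current_lp
        # Symmetric proposal, so the acceptance probability is min(1, posterior ratio).
        if log_ratio >= 0.0 or rng.random() < math.exp(log_ratio):
            current, current_lp = proposal, proposal_lp
        chain.append(current)
    return chain


if __name__ == "__main__":
    chain = metropolis_hastings(
        lambda mu: log_posterior(mu, n_obs=13, signal=5.0, background=3.0),
        start=1.0,
        n_steps=20000,
        step_size=1.0,
    )
    kept = chain[1000:]  # discard burn-in
    print("posterior mean of mu:", sum(kept) / len(kept))
```

Hamiltonian Monte Carlo replaces the random-walk proposal with trajectories guided by the gradient of the log posterior, which is where automatic differentiation becomes useful.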

Speaker: Horstmann, Malin (TU Munich / Max Planck Institute for Physics) -

39

A multidimensional, event-by-event, statistical weighting procedure for signal to background separation

Many current analyses in nuclear and particle physics search for signals that are surrounded by irreducible background events. These background events, entirely surrounding a signal of interest, would lead to inaccurate results when extracting physical observables from the data, due to the inability to improve the signal-to-background ratio using any type of selection criteria. By looking at a data set in multiple dimensions, the phase space of a desired reaction can be characterized by a set of coordinates, where a subset of these coordinates (known as reference coordinates) contains a distinguishable distribution where the signal and background can easily be determined. The approach then uses the space defined by the non-reference coordinates to determine the k-nearest neighbors of an event; the distribution of these k-nearest neighbors in the reference coordinates is then fit (using an unbinned maximum likelihood fit, etc.). From the fit, a quality factor can be defined for each event in the data set that states the probability that it originates from the actual signal of interest. The unique aspect of this procedure is that it requires no a priori information about the signal or background distributions within the phase space of the desired reaction. This and many other useful properties make this statistical weighting procedure more advantageous in certain analyses than other methods. A detailed overview of this procedure will be shown along with examples using Monte Carlo and GlueX data.
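The steps of such a procedure can be sketched in a few lines of standard-library Python. This is an illustration under stated assumptions, not the authors' implementation: the reference coordinate is taken to be a mass with a Gaussian signal peak of known position and width on a flat background, the only fitted quantity is the local signal fraction (found by a simple grid scan of the unbinned likelihood), and each event counts as one of its own neighbours. All names and numbers are hypothetical.

```python
import math
import random

MASS_LO, MASS_HI = 0.5, 1.5        # hypothetical range of the reference coordinate
PEAK_MEAN, PEAK_WIDTH = 1.0, 0.05  # hypothetical signal peak shape


def signal_pdf(mass):
    z = (mass - PEAK_MEAN) / PEAK_WIDTH
    return math.exp(-0.5 * z * z) / (PEAK_WIDTH * math.sqrt(2.0 * math.pi))


def background_pdf(mass):
    return 1.0 / (MASS_HI - MASS_LO)


def fit_signal_fraction(masses, n_grid=101):
    """Unbinned maximum-likelihood estimate of the signal fraction via a grid scan."""
    best_f, best_ll = 0.0, -math.inf
    for step in range(n_grid):
        f = step / (n_grid - 1)
        ll = sum(
            math.log(max(f * signal_pdf(m) + (1.0 - f) * background_pdf(m), 1e-300))
            for m in masses
        )
        if ll > best_ll:
            best_f, best_ll = f, ll
    return best_f


def quality_factors(events, k=20):
    """events: list of (non_reference_coords, mass). Returns one factor per event."""
    factors = []
    for coords, mass in events:
        # k nearest neighbours in the non-reference coordinates (event itself included).
        neighbours = sorted(events, key=lambda e: math.dist(e[0], coords))[:k]
        f = fit_signal_fraction([m for _, m in neighbours])
        s = f * signal_pdf(mass)
        b = (1.0 - f) * background_pdf(mass)
        factors.append(s / (s + b))
    return factors


if __name__ == "__main__":
    rng = random.Random(1)
    signal = [((rng.gauss(0.2, 0.05),), rng.gauss(1.0, 0.05)) for _ in range(100)]
    background = [((rng.gauss(0.8, 0.05),), rng.uniform(0.5, 1.5)) for _ in range(100)]
    q = quality_factors(signal + background)
    print("mean factor, signal-like region:    ", sum(q[:100]) / 100)
    print("mean factor, background-like region:", sum(q[100:]) / 100)
```

In practice the non-reference coordinates would typically be scaled to comparable ranges before computing distances, and the fit would float more than one shape parameter.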

Speaker: Baldwin, Zachary (Carnegie Mellon University)

-

34

-

Track 7 - Facilities and Virtualization: Dynamic Provisioning and Anything-As-A-Service Marriott Ballroom IV

Marriott Ballroom IV

Norfolk Waterside Marriott

235 East Main Street Norfolk, VA 23510

Conveners: Kishimoto, Tomoe (KEK), Weitzel, Derek (University of Nebraska-Lincoln)

40

Securing an Open and Trustworthy Ecosystem for Research Infrastructure and Applications (SOTERIA)

Managing a secure software environment is essential to a trustworthy cyberinfrastructure. Software supply chain attacks may be a top concern for IT departments, but they are also an aspect of scientific computing. The threat to scientific reputation caused by problematic software can be just as dangerous as an environment contaminated with malware. The issue of managing environments affects any individual researcher performing computational research but is more acute for multi-institution scientific collaborations, such as high energy physics experiments, as they often preside over complex software stacks and must manage software environments across many distributed computing resources. We discuss a new project, Securing an Open and Trustworthy Ecosystem for Research Infrastructure and Applications (SOTERIA), to provide the HEP community with a container registry service and provide additional capabilities to assist with vulnerability assessment, authorship and provenance, and distribution. This service is currently being used to deliver containers for a wide range of the OSG Fabric of Services, the Coffea-Casa analysis facility, and the Analysis Facility at the University of Chicago; we discuss both the functionality it currently provides and the operational experiences of running a critical service for scientific cyberinfrastructure.

Speaker: Bockelman, Brian (Morgridge Institute for Research)

41

Modular toolsets for integrating HPC clusters in experiment control systems

New particle and nuclear physics experiments require a massive amount of computing power that can only be achieved by using high performance clusters directly connected to the data acquisition systems and integrated into the online systems of the experiments. However, integrating an HPC cluster into the online system of an experiment means managing and synchronizing thousands of processes that handle the huge throughput. In this work, modular components that can be used to build and integrate such an HPC cluster in the experiment control system (ECS) will be introduced.

The Online Device Control library (ODC) [1], in combination with the Dynamic Deployment System (DDS) [2, 3] and the FairMQ [4] message queuing library, offers a sustainable solution for integrating HPC cluster controls into an ECS.

DDS, as part of the ALFA framework [5], is a toolset that automates and significantly simplifies the dynamic deployment of user-defined processes and their dependencies on any resource management system (RMS) using a given process graph (topology). ODC is the tool used to control and communicate with a topology of FairMQ processes using DDS. ODC is designed to act as a broker between a high-level experiment control system and a low-level task management system such as DDS.

In this presentation, the architecture of both DDS and ODC will be discussed, as well as the design decisions taken based on the experience gained from using these tools in production in the ALICE experiment at CERN to deploy and control thousands of processes (tasks) on the Event Processing Nodes (EPN) cluster during Run 3 as part of the ALICE O2 software ecosystem [6].

References:

1. FairRootGroup, “ODC git repository”, Last accessed 14th of November 2022: https://github.com/FairRootGroup/ODC

2. FairRootGroup, “DDS home site”, Last accessed 14th of November 2022: http://dds.gsi.de

3. FairRootGroup, “DDS source code repository”, Last accessed 14th of November 2022: https://github.com/FairRootGroup/DDS

4. FairMQ, “FairMQ git repository”, Last accessed 14th of November 2022: https://github.com/FairRootGroup/FairMQ

5. https://indico.gsi.de/event/2715/contributions/11355/attachments/8580/10508/ALFA_Fias.pdf

6. ALICE Technical Design Report (2nd of June 2015), Last accessed 14th of November: https://cds.cern.ch/record/2011297/files/ALICE-TDR-019.pdf

Speaker: Dr Al-Turany, Mohammad (GSI)

42

Federated Heterogeneous Compute and Storage Infrastructure for the PUNCH4NFDI Consortium

PUNCH4NFDI, funded by the German Research Foundation initially for five years, is a diverse consortium of particle, astro-, astroparticle, hadron and nuclear physics embedded in the National Research Data Infrastructure initiative.

In order to provide seamless and federated access to the huge variety of compute and storage systems provided by the participating communities, covering their very diverse needs, the Compute4PUNCH and Storage4PUNCH concepts have been developed. Both concepts comprise state-of-the-art technologies such as a token-based AAI for standardised access to compute and storage resources. The community-supplied heterogeneous HPC, HTC and Cloud compute resources are dynamically and transparently integrated into one federated HTCondor-based overlay batch system using the COBalD/TARDIS resource meta-scheduler. Traditional login nodes and a JupyterHub provide entry points into the entire landscape of available compute resources, while container technologies and the CERN Virtual Machine File System (CVMFS) ensure a scalable provisioning of community-specific software environments. In Storage4PUNCH, community-supplied storage systems mainly based on dCache or XRootD technology are being federated in a common infrastructure employing methods that are well established in the wider HEP community. Furthermore, existing technologies for caching as well as metadata handling are being evaluated with the aim of a deeper integration. The combined Compute4PUNCH and Storage4PUNCH environment will allow a large variety of researchers to carry out resource-demanding analysis tasks.

In this contribution we will present the Compute4PUNCH and Storage4PUNCH concepts, the current status of the developments as well as first experiences with scientific applications being executed on the available prototypes.

Speaker: Dr Giffels, Manuel (Karlsruher Institut für Technologie (KIT))

43

Progress on cloud native solution of Machine Learning as Service for HEP

Machine Learning (ML) techniques are now used successfully in many areas of High-Energy Physics (HEP) and will also play a significant role in the upcoming High-Luminosity LHC upgrade foreseen at CERN, when a huge amount of data will be produced by the LHC and collected by the experiments, facing challenges at the exascale. To favor the usage of ML in HEP analyses, it would be useful to have a service that allows users to perform the entire ML pipeline (reading the data, processing the data, training an ML model, and serving predictions) directly using ROOT files of arbitrary size from local or remote distributed data sources. The MLaaS4HEP solution we have already proposed aims to provide such a service and to be HEP experiment agnostic. Recently, new features have been introduced, such as the possibility to provide pre-processing operations, define new branches, and apply cuts. To provide users with a real service and to integrate it into the INFN Cloud, we started working on MLaaS4HEP cloudification. This would allow the use of cloud resources and work in a distributed environment. In this work, we provide updates on this topic and discuss a working prototype of the service running on INFN Cloud. It includes an OAuth2 proxy server as the authentication/authorization layer, an MLaaS4HEP server, an XRootD proxy server for enabling access to remote ROOT data, and the TensorFlow as a Service (TFaaS) service in charge of the inference phase. With this architecture, a HEP user who has been authenticated and authorized can submit ML pipelines on local or remote ROOT files simply using HTTP calls.

Speaker: Giommi, Luca (INFN Bologna)

44

Demand-driven provisioning of Kubernetes-like resource in OSG

The OSG-operated Open Science Pool is an HTCondor-based virtual cluster that aggregates resources from compute clusters provided by several organizations. A user can submit batch jobs to the OSG-maintained scheduler, and they will eventually run on a combination of supported compute clusters without any further user action. Most of the resources are not owned by, or even dedicated to OSG, so demand-based dynamic provisioning is important for maximizing usage without incurring excessive waste.

OSG has long relied on GlideinWMS for most of its resource provisioning needs, but GlideinWMS is limited to resources that provide a Grid-compliant Compute Entrypoint. To work around this limitation, the OSG software team developed a pilot container that resource providers could use to directly contribute to the OSPool. The problem with that approach is that it is not demand-driven, relegating it to backfill scenarios only.

To address this limitation, a demand-driven direct provisioner of Kubernetes resources has been developed and successfully used on the PRP. The setup still relies on the OSG-maintained backfill container images; it just automates the provisioning matchmaking and successive requests. That provisioner has also recently been extended to support Lancium, a green computing cloud provider with a Kubernetes-like proprietary interface. The provisioner logic was intentionally kept very simple, making this extension a low-cost project.
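To make the idea of demand-driven provisioning concrete, here is a minimal sketch of the kind of decision such a provisioner might take in each cycle. It is not the OSG provisioner's code: the function name, its parameters, and the policy of capping requests at a fixed pod limit are all assumptions made for illustration.

```python
def pods_to_request(idle_jobs, pending_pods, running_pods, max_pods, jobs_per_pod=1):
    """Number of new pilot pods to request in one provisioning cycle.

    Demand is the idle job count. Pods already requested but not yet running
    are subtracted so the same demand is not provisioned twice, and the
    total number of pods never exceeds max_pods.
    """
    needed = -(-idle_jobs // jobs_per_pod)  # ceiling division
    shortfall = max(0, needed - pending_pods)
    headroom = max(0, max_pods - pending_pods - running_pods)
    return min(shortfall, headroom)


# 25 idle jobs, 5 pods pending, 90 running, limit of 100 pods: only 5 more fit.
print(pods_to_request(idle_jobs=25, pending_pods=5, running_pods=90, max_pods=100))  # prints 5
```

With no idle jobs the function returns zero, so no new pods are requested when there is no demand, in contrast to a pure backfill deployment.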

Both PRP and Lancium resources have been provisioned exclusively using this mechanism for almost a year with great results.

Speakers: Andrijauskas, Fabio, Schultz, David (IceCube, University of Wisconsin-Madison)

-

40

-

Track 8 - Collaboration, Reinterpretation, Outreach and Education: Tailored Collaboration and Training Initiatives Marriott Ballroom I

Marriott Ballroom I

Norfolk Waterside Marriott

235 East Main Street Norfolk, VA 23510

Conveners: Hernandez Villanueva, Michel (DESY), Lange, Clemens (Paul Scherrer Institut)

45

Involving the new generations in Fermilab endeavours

Since 1984 the Italian groups of the Istituto Nazionale di Fisica Nucleare (INFN) and Italian Universities, collaborating with the DOE laboratory of Fermilab (US), have been running a two-month summer training program for Italian university students. While in the first year the program involved only four physics students of the University of Pisa, in the following years it was extended to engineering students. This extension was very successful and the engineering students have been since then extremely well accepted by the Fermilab Technical, Accelerator and Scientific Computing Division groups. Over the many years of its existence, this program has proven to be the most effective way to engage new students in Fermilab endeavours. Many students have extended their collaboration with Fermilab with their Master Thesis and PhD.

Since 2004 the program has been supported in part by DOE in the frame of an exchange agreement with INFN. Over its almost 40 years of history, the program has grown in scope and size and has involved more than 550 Italian students from more than 20 Italian Universities. A number of Institutes of Research, including ASI and INAF in Italy, and the ISSNAF Foundation in the US, have provided additional financial support. Since the program does not exclude appropriately selected non-Italian students, a handful of students from European and non-European Universities have also been accepted over the years.

Each intern is supervised by a Fermilab Mentor responsible for performing the training program. Training programs have spanned Tevatron, CMS, Muon (g-2), Mu2e, Short Baseline Neutrino and DUNE design and experimental data analysis; development of particle detectors (silicon trackers, calorimeters, drift chambers, neutrino and dark matter detectors); design of electronic and accelerator components; development of infrastructures and software for tera-data handling; research on superconductive elements and on accelerating cavities; and theory of particle accelerators.

Since 2010, within an extended program supported by the Italian Space Agency and the Italian National Institute of Astrophysics, a total of 30 students in physics, astrophysics and engineering have been hosted for two months in summer at US space science Research Institutes and laboratories.

In 2015 the University of Pisa included these programs within its own educational programs. Accordingly, Summer School students are enrolled at the University of Pisa for the duration of the internship and are identified and insured as such. At the end of the internship the students are required to write summary reports on their achievements. After positive evaluation by a University Examining Board, interns are awarded 6 ECTS credits for their Diploma Supplement.

Information on student recruiting methods, on training programs of recent years and on the final student evaluation process at Fermilab and at the University of Pisa will be given in the presentation.

In the years 2020 and 2021 the Program has been canceled due to the persisting effects of the sanitary emergency which